What is Screaming Frog?

Screaming Frog SEO Spider is a program that crawls a website much as Google's robots do, extracting the information needed to improve its SEO. The audits it performs are very extensive: from 404 errors and broken links to the analysis of meta descriptions and Title tags, Screaming Frog is certainly one of the modern SEO expert's best allies.

The price of Screaming Frog: free or paid?

The free version of Screaming Frog lets you crawl up to 500 URLs at no cost. For occasional SEO work, this free option may be sufficient. However, a paid Screaming Frog license is preferable if you want to experience the full power of the software.

How to use a web crawler for SEO?

It is a very versatile tool with many applications. At Rablab, we use Screaming Frog on a daily basis to:

- Create solid transfer plans containing all the essential information for the migration or redesign of a website

- Perform dynamic Keyword Mapping

- Check page status (200, 404, …)

- Identify broken links

- Identify redirects (301…)
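To make the status checks above concrete, here is a small standalone Python sketch (not part of Screaming Frog) that sorts HTTP status codes into the families an audit usually groups them by. It relies only on the standard library's `http.HTTPStatus` for the reason phrases; the family labels are our own wording.

```python
from http import HTTPStatus


def status_class(code: int) -> str:
    """Group an HTTP status code into the family an SEO audit usually sorts it into."""
    if 200 <= code < 300:
        return "success"
    if 300 <= code < 400:
        return "redirect"
    if 400 <= code < 500:
        return "client error"
    if 500 <= code < 600:
        return "server error"
    return "other"


def describe(code: int) -> str:
    """Return a label such as '404 Not Found (client error)'."""
    try:
        phrase = HTTPStatus(code).phrase
    except ValueError:  # code not defined in http.HTTPStatus
        phrase = "Unknown"
    return f"{code} {phrase} ({status_class(code)})"


if __name__ == "__main__":
    for code in (200, 301, 404, 429, 503):
        print(describe(code))
```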

Crawling a website with Screaming Frog

For those who want to get to the heart of the matter, here is an overview of how to run a crawl with our favorite tool.

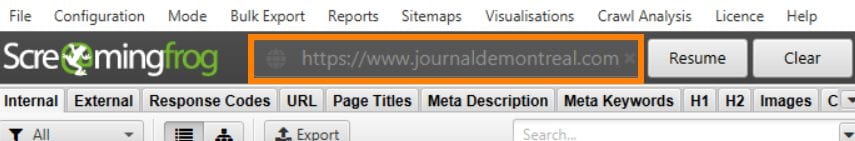

Once the software is open, the process can be as simple as pasting the URL to crawl into the field to the right of the logo and clicking Start:

However, we suggest fine-tuning your settings to ensure a successful crawl!

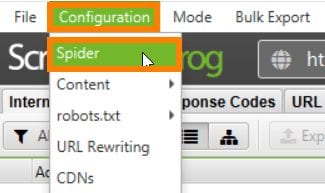

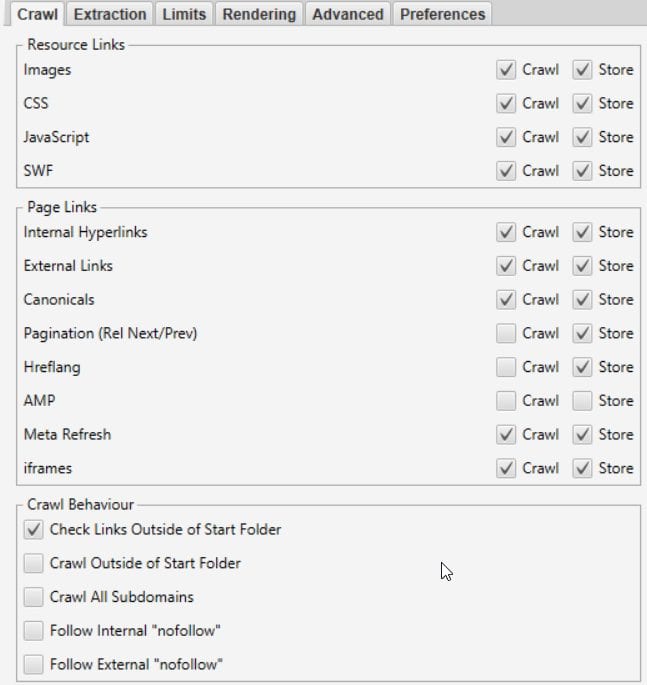

The Configuration menu is full of options that let you make decisive adjustments, in particular the Spider sub-menu:

Its options and tabs let you choose precisely which elements the Screaming Frog robot will explore (e.g., images, PDFs, external links). Unchecking the least relevant ones saves crawl time and reduces the sorting work on your export.
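As an illustration of what those checkboxes decide, here is a hypothetical Python filter that a home-made crawler could apply before queuing a discovered URL. The function name, its parameters and the extension lists are our own assumptions; they do not reproduce Screaming Frog's internal rules.

```python
from urllib.parse import urlparse

# Hypothetical file-type groups; Screaming Frog's own detection rules may differ.
IMAGE_EXTENSIONS = (".jpg", ".jpeg", ".png", ".gif", ".webp", ".svg")
PDF_EXTENSIONS = (".pdf",)


def should_crawl(url: str, site_host: str, *, images: bool = False,
                 pdfs: bool = False, external: bool = False) -> bool:
    """Decide whether a discovered URL is queued, mimicking checkbox-style options."""
    parsed = urlparse(url)
    path = parsed.path.lower()
    # External links are skipped unless explicitly enabled.
    if parsed.hostname != site_host and not external:
        return False
    # Skip images and PDFs unless their option is switched on.
    if path.endswith(IMAGE_EXTENSIONS) and not images:
        return False
    if path.endswith(PDF_EXTENSIONS) and not pdfs:
        return False
    return True
```

Leaving every option off by default mirrors the advice above: the fewer resource types you queue, the shorter the crawl and the lighter the export.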

Rablab tips:

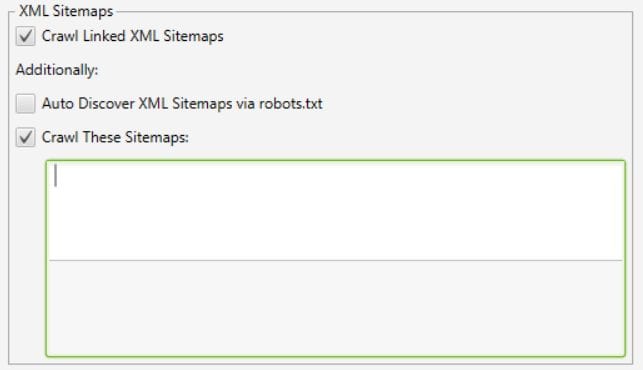

It is very common to crawl a site whose robots.txt does not reference its sitemap, and the robots sometimes have trouble finding that file. As a result, the final export may not contain every page: if internal linking is poor, orphan pages (pages that no internal link points to) will almost certainly be missing.

Our “SEO Pro Tip” of the day is therefore to always add the sitemap before launching the crawl, as shown below:

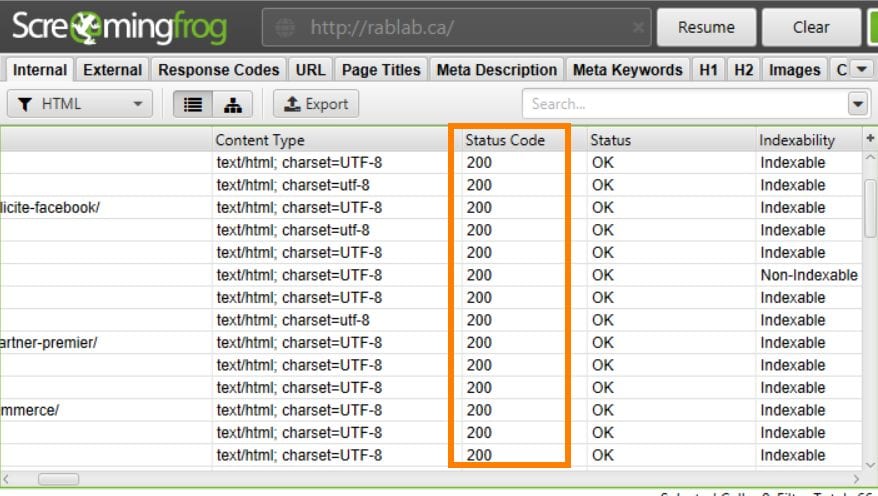
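Why does the sitemap matter so much? A spider discovers pages by following links, so a page nothing links to is never reached. The standalone Python sketch below (our own illustration, not Screaming Frog code) checks whether robots.txt declares a sitemap, reads the `<loc>` entries of a standard XML sitemap, runs a breadth-first traversal over a hypothetical in-memory link graph, and reports the sitemap URLs the traversal never reached. Sitemap index files are not handled.

```python
from __future__ import annotations

import xml.etree.ElementTree as ET
from collections import deque
from urllib.robotparser import RobotFileParser

# Namespace used by standard XML sitemaps (sitemaps.org protocol).
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemaps_in_robots(robots_txt: str) -> list[str]:
    """Return the Sitemap: URLs declared in a robots.txt body (empty list if none)."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser.site_maps() or []


def sitemap_urls(xml_text: str) -> set[str]:
    """Collect every <loc> of a <urlset> sitemap."""
    root = ET.fromstring(xml_text)
    return {loc.text.strip() for loc in root.findall("sm:url/sm:loc", SITEMAP_NS) if loc.text}


def reachable_by_links(start: str, links: dict[str, list[str]]) -> set[str]:
    """Breadth-first traversal: the pages a spider finds by following links alone."""
    seen = {start}
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


def orphan_pages(start: str, links: dict[str, list[str]], xml_text: str) -> set[str]:
    """Pages listed in the sitemap that internal links never lead to."""
    return sitemap_urls(xml_text) - reachable_by_links(start, links)


if __name__ == "__main__":
    # Hypothetical site: /orphan is in the sitemap but no page links to it.
    links = {
        "https://example.com/": ["https://example.com/a"],
        "https://example.com/a": ["https://example.com/"],
    }
    sitemap = (
        '<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">'
        "<url><loc>https://example.com/</loc></url>"
        "<url><loc>https://example.com/a</loc></url>"
        "<url><loc>https://example.com/orphan</loc></url>"
        "</urlset>"
    )
    print(orphan_pages("https://example.com/", links, sitemap))
```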

Once the crawl is complete, and before moving on to the final export, I strongly recommend briefly checking the page status codes directly in the Screaming Frog interface:

Over the course of your many crawls, you may notice certain irregularities, such as the 429 status code (Too Many Requests).

What is a 429 code, you ask?

As described by Mozilla in this article on status code 429, this response indicates that the user has sent too many requests in a given amount of time (“rate limiting”); the server may also include a Retry-After header saying how long to wait before trying again. It is usually a server-side security measure that protects the website against malicious attacks and prevents a potential server crash.
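If you ever script your own checks, the Retry-After header can hold either a number of seconds or an HTTP date. The Python sketch below (standard library only, independent of Screaming Frog) turns either form into a wait time; the 60-second fallback used when the header is missing or unreadable is an arbitrary assumption.

```python
from __future__ import annotations

from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def retry_after_seconds(header: str | None, now: datetime, default: float = 60.0) -> float:
    """Convert a Retry-After value (seconds or HTTP date) into seconds to wait."""
    if header is None:
        return default  # assumption: fall back to a fixed pause
    value = header.strip()
    if value.isdigit():  # delay-seconds form, e.g. "120"
        return float(value)
    try:  # HTTP-date form, e.g. "Wed, 21 Oct 2015 07:28:00 GMT"
        when = parsedate_to_datetime(value)
    except (TypeError, ValueError):  # unparseable date (error type varies by Python version)
        return default
    if when.tzinfo is None:
        when = when.replace(tzinfo=timezone.utc)
    # A date already in the past means we may retry immediately.
    return max(0.0, (when - now).total_seconds())
```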

There are a few ways to solve this problem:

- Reduce the crawling speed (number of pages crawled per second)

- Use another “User Agent”

Reduce crawl speed

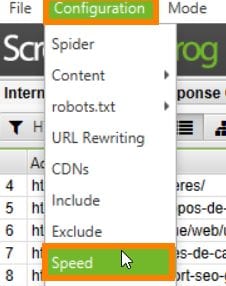

To reduce the number of requests per second, so as not to overload the server or trigger its protection mechanisms, go to Configuration > Speed.

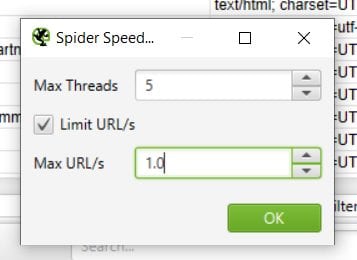

In the window that opens, simply lower the maximum number of URLs per second, as in this screenshot:

It is also a good idea to check whether the site's robots.txt file specifies a crawl delay (a Crawl-delay directive) and, if it does, to set your crawl speed accordingly.
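Here is a minimal Python sketch of that reasoning, assuming you already have the robots.txt text in hand: the pause between requests should be the stricter of your own speed cap and any Crawl-delay. It uses the standard library's `urllib.robotparser`, which only reads whole-number Crawl-delay values; the function name is our own.

```python
from urllib.robotparser import RobotFileParser


def min_interval_seconds(robots_txt: str, user_agent: str, max_urls_per_second: float) -> float:
    """Seconds between requests: the stricter of the speed cap and the robots.txt Crawl-delay."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    # crawl_delay() returns None when no Crawl-delay applies to this user agent.
    crawl_delay = parser.crawl_delay(user_agent) or 0
    return max(1.0 / max_urls_per_second, float(crawl_delay))
```

For example, a cap of 2 URLs per second means one request every 0.5 s, but a `Crawl-delay: 5` line raises that to one request every 5 s.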

The crawl will take a little longer, but you can now relaunch it and enjoy an export free of 429 errors! As a colleague often says, you can now enjoy a complete, high-quality export.
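How much longer? The idea behind a “maximum URLs per second” cap can be sketched as a small throttle that spaces out requests, plus a back-of-the-envelope duration estimate. This is our own Python illustration of the principle, not Screaming Frog's implementation; the clock and sleep functions are injectable so the behavior can be checked without waiting.

```python
import time
from typing import Callable


class Throttle:
    """Spaces out requests so that at most `max_urls_per_second` start per second."""

    def __init__(self, max_urls_per_second: float,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep) -> None:
        self.interval = 1.0 / max_urls_per_second
        self.clock = clock
        self.sleep = sleep
        self.next_allowed = clock()

    def wait(self) -> None:
        """Block until the next request is allowed, then reserve the following slot."""
        now = self.clock()
        if now < self.next_allowed:
            self.sleep(self.next_allowed - now)
            now = self.next_allowed
        self.next_allowed = now + self.interval


def estimated_duration_seconds(url_count: int, max_urls_per_second: float) -> float:
    """Lower bound on crawl time when the speed cap is the bottleneck."""
    return url_count / max_urls_per_second
```

At 2 URLs per second, for instance, a 5,000-URL site needs at least 2,500 seconds, or about 42 minutes.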

Use another User Agent

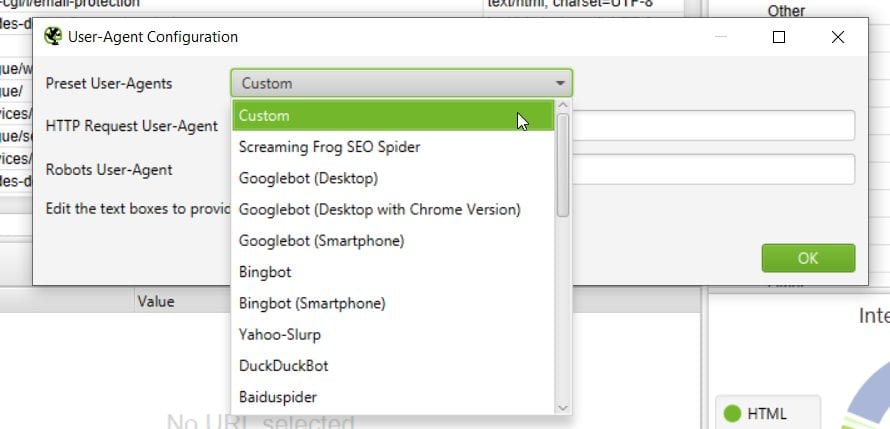

An often underestimated feature, changing the User Agent is very useful when you spot errors (429 errors in particular).

Briefly, and to avoid any misunderstanding, a User Agent is the name a crawler announces to the server with each request, identifying what type of crawler is visiting. The best known is undoubtedly Googlebot, the famous Google web crawler that scours the web to discover and index sites around the world. Googlebot itself comes in two types: Googlebot Desktop and Googlebot Smartphone. If your Google Search Console shows that your site is indexed “mobile first”, it means that Googlebot Smartphone is the crawler that primarily crawls your website. If you want to learn more about the amazing and useful Google Search Console, and how to use it wisely to improve your SEO, I suggest you check out our article on GSC.
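Concretely, the User Agent travels as the `User-Agent` HTTP request header. The Python sketch below builds (without sending) a request carrying a chosen User-Agent using the standard `urllib.request.Request`. The two strings are published examples for Googlebot Desktop and Bingbot, but crawlers update them, so check the official documentation for current values; the dictionary keys are our own labels.

```python
from urllib.request import Request

# Example User-Agent strings; verify current values in each crawler's documentation.
USER_AGENTS = {
    "googlebot-desktop": "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)",
    "bingbot": "Mozilla/5.0 (compatible; bingbot/2.0; +http://www.bing.com/bingbot.htm)",
}


def build_request(url: str, agent_key: str) -> Request:
    """Prepare a GET request that announces the chosen crawler name."""
    return Request(url, headers={"User-Agent": USER_AGENTS[agent_key]})
```

Note that `urllib` stores header names capitalized, so the value is read back with `get_header("User-agent")`. Borrowing a crawler's name does not change your IP address, so servers that verify Googlebot by reverse DNS can still tell the difference.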

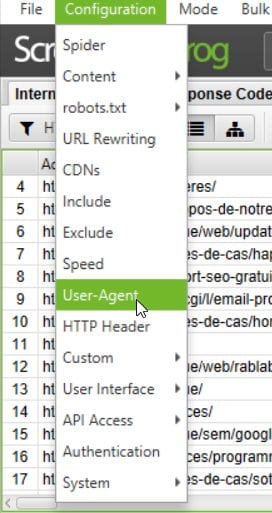

The User Agent setting is also found in the Configuration menu, under User-Agent:

As you will see, Screaming Frog uses its own User Agent by default, so unless you change this setting, the URLs are requested under Screaming Frog's own crawler name. However, you can choose to crawl as Googlebot, Bingbot, DuckDuckBot and many others. It is worth varying the User Agent to observe any differences in the resulting crawls.
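One common source of such differences is a robots.txt with per-crawler rules. The Python sketch below uses the standard `urllib.robotparser` to show how the same URL can be allowed for one User Agent and blocked for others; the robots.txt content is a made-up example. Be aware that this parser applies the first matching rule in file order, whereas Google applies the most specific one, so edge cases can differ.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt: Googlebot may crawl everything, other crawlers not /beta/.
ROBOTS_TXT = """\
User-agent: Googlebot
Allow: /

User-agent: *
Disallow: /beta/
"""


def allowed_by_agent(robots_txt: str, url: str, agents: list) -> dict:
    """Map each crawler name (product token) to whether robots.txt lets it fetch `url`."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {agent: parser.can_fetch(agent, url) for agent in agents}


if __name__ == "__main__":
    print(allowed_by_agent(ROBOTS_TXT, "https://example.com/beta/page",
                           ["Googlebot", "bingbot", "Screaming Frog SEO Spider"]))
```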

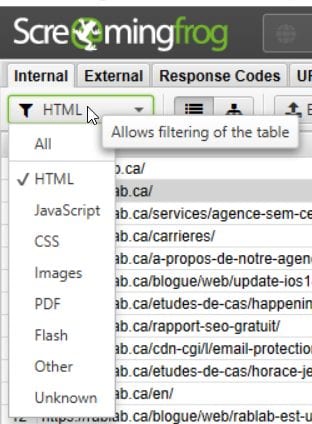

When you are ready to export all this data, you can choose the items you want to include in the export:

You are free to choose the method that best suits the situation, but a “raw export” containing all the data is generally worth having, especially when preparing a transfer plan.
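Once you have a raw CSV export, a few lines of Python are enough for a first triage. This sketch assumes columns named `Address` and `Status Code`, modelled on Screaming Frog's exports; check the headers of your own file (and any title line above them) before relying on it.

```python
import csv
import io
from collections import Counter

# Assumed column names; adjust them to match the headers of your own export.
ADDRESS_COL = "Address"
STATUS_COL = "Status Code"


def status_summary(csv_text: str) -> Counter:
    """Count crawled URLs per HTTP status code (rows without a numeric code are skipped)."""
    rows = csv.DictReader(io.StringIO(csv_text))
    return Counter(int(r[STATUS_COL]) for r in rows if r[STATUS_COL].isdigit())


def needs_attention(csv_text: str) -> list:
    """URLs whose status is outside the 2xx range (redirects, client and server errors)."""
    rows = csv.DictReader(io.StringIO(csv_text))
    return [r[ADDRESS_COL] for r in rows
            if r[STATUS_COL].isdigit() and not 200 <= int(r[STATUS_COL]) < 300]
```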

Broken links with Screaming Frog

As you can see, Screaming Frog offers nearly boundless possibilities. It is also an excellent tool for identifying broken links (one of the SEO specialist's great enemies) at lightning speed. We have also published an article dedicated to 404 errors and how to fix them; highly recommended reading!

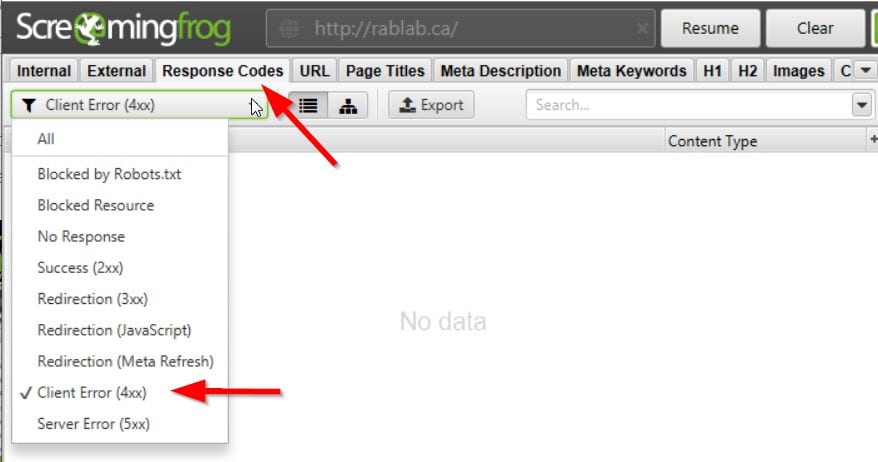

Once the crawl is done (or even during a live crawl!), simply go to the “Response Codes” tab and select “Client Error (4xx)” from the drop-down menu.

Of course, the Rablab.ca example above shows no errors. We are specialists, after all…

But how do you know where the broken link is located?

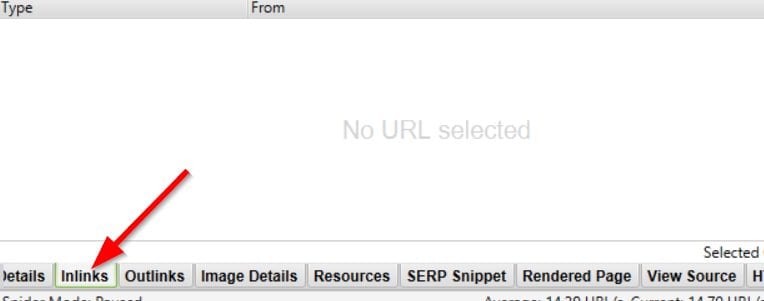

Select the broken URL, then open the “Inlinks” tab at the bottom of the window to inspect the pages that link to it.
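On a large site, it can be handier to export the inlinks and group them outside the tool. This Python sketch assumes a CSV with `Source`, `Destination` and `Status Code` columns, which is how we expect an inlinks export to look; verify the headers of your own file. It lists, for each broken (4xx) destination, the pages that link to it.

```python
from __future__ import annotations

import csv
import io
from collections import defaultdict


def broken_link_sources(inlinks_csv: str) -> dict[str, list[str]]:
    """Map each 4xx destination URL to the source pages that link to it."""
    sources: dict[str, list[str]] = defaultdict(list)
    for row in csv.DictReader(io.StringIO(inlinks_csv)):
        code = row.get("Status Code", "")  # assumed column name
        if code.isdigit() and 400 <= int(code) < 500:
            sources[row["Destination"]].append(row["Source"])
    return dict(sources)
```

Grouping by destination means each broken URL is fixed once, whether by restoring the page, redirecting it, or updating every source page listed.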

This overview of Screaming Frog only scratches the surface. The options are endless, and the possible applications just as numerous.

Still feeling overwhelmed despite this gentle introduction?

No worries, just contact our team of experts. We will be happy to take care of the User Agent, crawl speed and other technical options for you. Do you need complete support for your digital marketing strategy? Discover all of Rablab’s services: SEO, SEM, SMM and Programmatic.